Seeing with the Hands

A sensory substitution device that supports manual interactions

Our team at the University of Chicago engineered a wearable device that lets users "see" with their hands as they hover over objects. It is a sensory substitution device: it helps users perceive objects by translating one modality (e.g., vision) into another (e.g., touch), and it was developed for the needs of Blind and Low Vision users. A miniature camera mounted on the palmar side of the hand captures what lies beneath it, and an electrotactile display renders that view as a tactile image that the user feels on the back of their hand. This new way of seeing lets users leverage two properties of the hands: their affordance (the palm faces the objects it is about to grasp, which allows the hand to preshape) and their flexibility (hands move rapidly, reach from multiple angles, explore tight spaces, circle around occluded objects, etc.). This opens new possibilities for supporting manual tasks in everyday life without vision.

A new tactile perspective designed for grasping objects

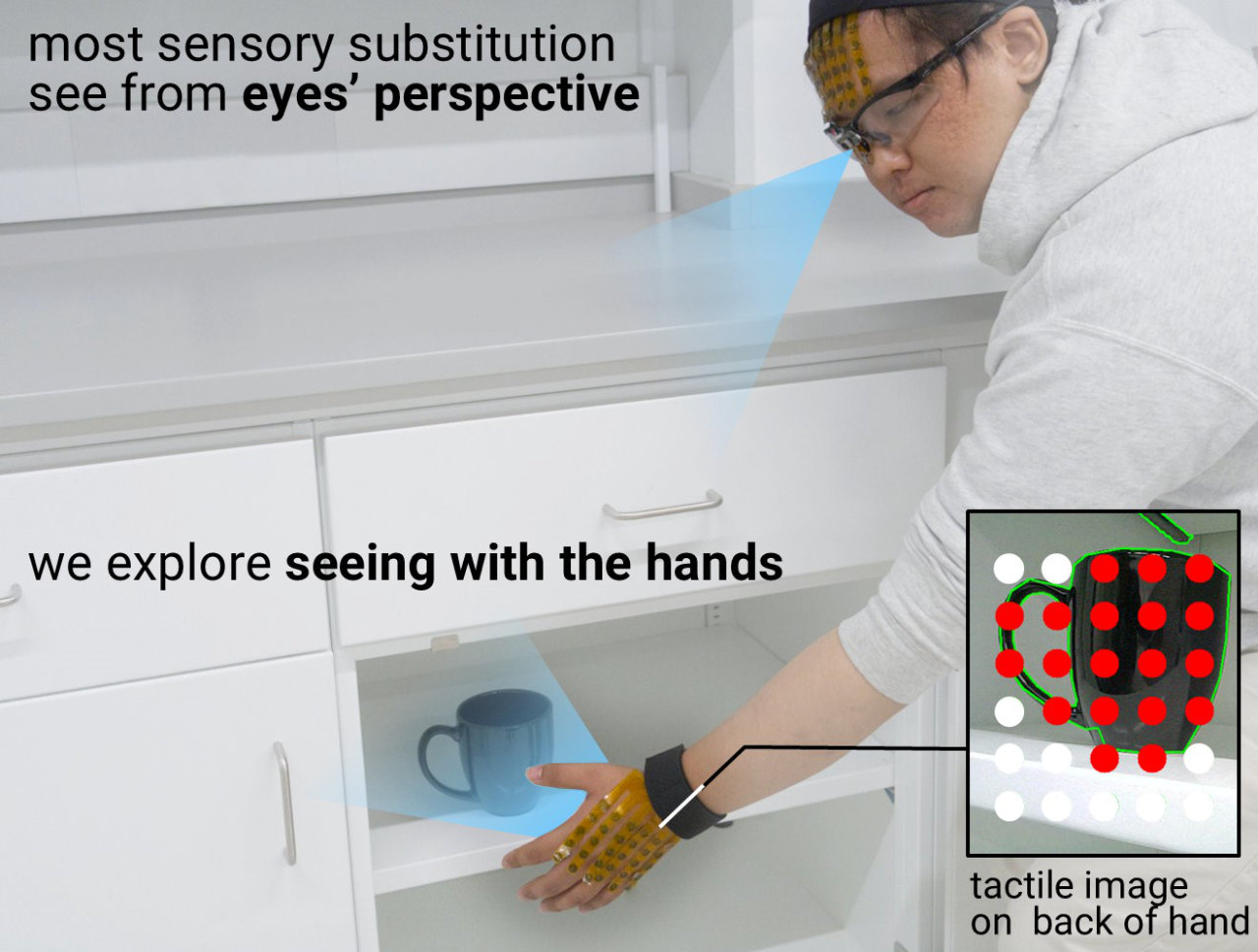

Typical sensory substitution interfaces are designed to substitute for the eyes, helping users perceive their surroundings (e.g., for navigation). We explore a new tactile perspective by placing the camera on the palmar side of the hand, the side that faces the objects being grasped and manipulated.

Our study participants envisioned using the device for a variety of manual tasks, such as finding the handrail while walking down stairs, identifying and grabbing ingredients on a shelf while cooking, and retrieving an object that has fallen on the floor.

How it works

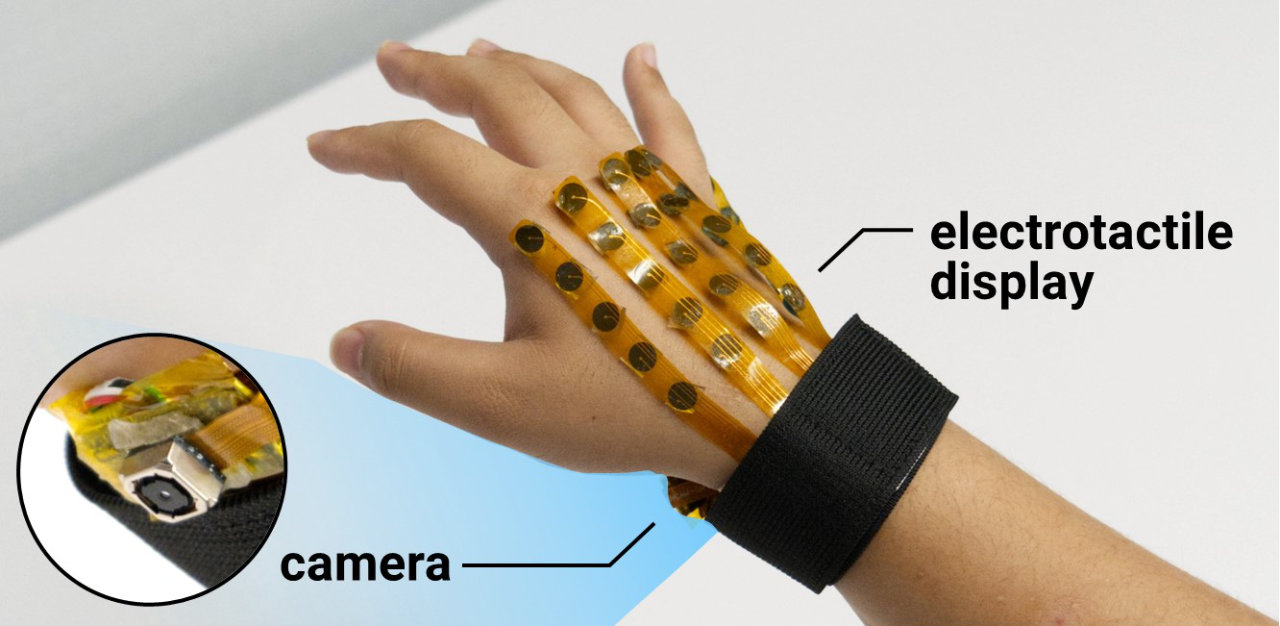

In real time, computer vision techniques segment the camera's image of the area under the hand and translate it into tactile patterns. The user feels these patterns on the back of the hand through a custom 5×6 electrotactile display that is thin (0.1 mm) and flexible.
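
To make the image-to-pattern step concrete, here is a minimal sketch of how a segmented camera frame could be reduced to the 5×6 grid. The text does not specify the vision pipeline, so several assumptions apply: `segment` is a hypothetical stand-in that simply marks dark pixels as "object" (the real system may use a very different segmentation method), the grid is assumed to be 5 rows by 6 columns, and a tactile pixel is switched on when at least half of its image block is covered by the object (`min_fill` is a hypothetical parameter).

```python
from typing import List

Image = List[List[float]]  # grayscale frame, values in [0, 1]
Mask = List[List[bool]]    # True = pixel belongs to the object
Grid = List[List[bool]]    # True = tactile pixel is active

# Assumption: the 5x6 display is laid out as 5 rows x 6 columns.
ROWS, COLS = 5, 6


def segment(image: Image, threshold: float = 0.5) -> Mask:
    """Hypothetical stand-in segmentation: pixels darker than `threshold` count as object."""
    return [[px < threshold for px in row] for row in image]


def to_tactile_grid(mask: Mask, rows: int = ROWS, cols: int = COLS,
                    min_fill: float = 0.5) -> Grid:
    """Downsample a binary mask to a rows x cols grid of on/off tactile pixels.

    Each tactile pixel covers one rectangular block of the image and is turned on
    when the fraction of object pixels in that block is at least `min_fill`.
    """
    h, w = len(mask), len(mask[0])
    grid: Grid = []
    for r in range(rows):
        y0, y1 = r * h // rows, (r + 1) * h // rows
        row: List[bool] = []
        for c in range(cols):
            x0, x1 = c * w // cols, (c + 1) * w // cols
            block = [mask[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            fill = sum(block) / len(block) if block else 0.0
            row.append(fill >= min_fill)
        grid.append(row)
    return grid


if __name__ == "__main__":
    # A 50x60 bright frame with a dark object in its top-left corner.
    frame = [[0.0 if (y < 20 and x < 30) else 1.0 for x in range(60)] for y in range(50)]
    for row in to_tactile_grid(segment(frame)):
        print("".join("#" if on else "." for on in row))
```

Averaging over blocks (rather than sampling one image pixel per tactile pixel) keeps the pattern stable when the hand jitters slightly, which seems a reasonable choice for such a coarse display; it is a design assumption here, not a description of the authors' code.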

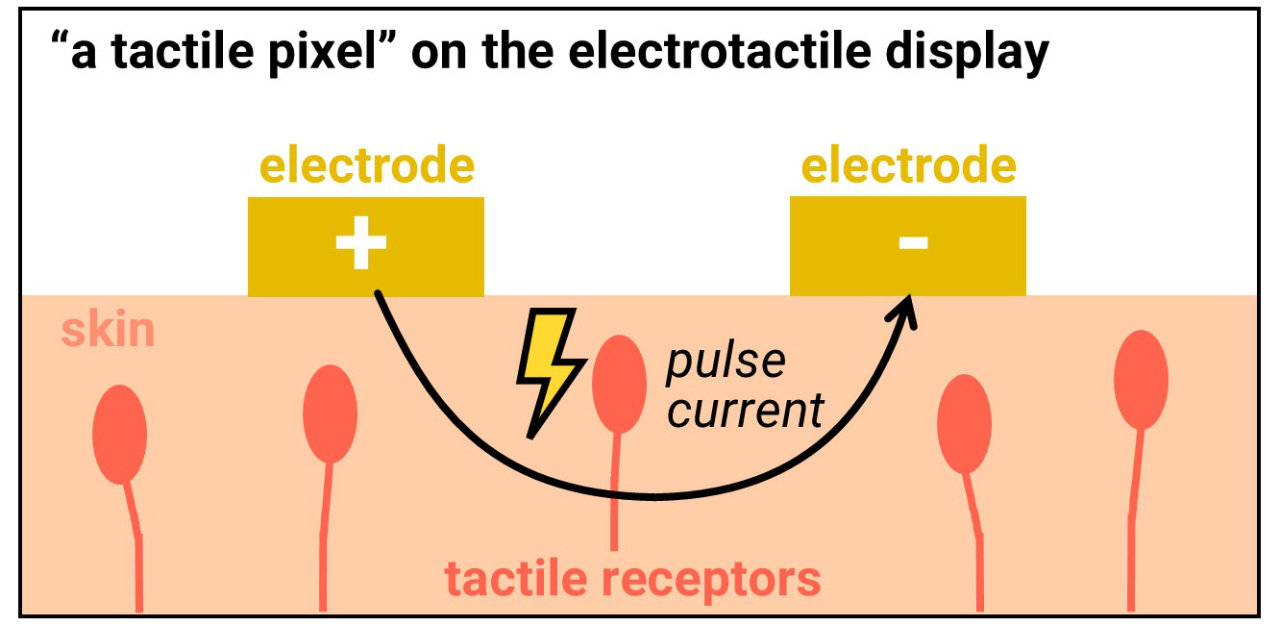

Each "tactile pixel" on the electrotactile display consists of a pair of electrodes. Whenever a pixel should be felt, our system passes tiny current pulses (3–5 mA) between its electrodes; these stimulate the tactile receptors under the skin and produce a sensation of light touch.
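
As an illustration of how an on/off tactile grid could become stimulation commands, the sketch below emits one pulse per active electrode pair with the current clamped to the 3–5 mA range given above. The text does not describe how the display is driven, so the following are assumptions: pixels are stimulated one at a time in row-major order, electrode pairs are indexed row-major, and the pulse width (`pulse_us`), the `Pulse` record, and `schedule_frame` are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List

# Current range stated in the text; everything else below is hypothetical.
MIN_MA, MAX_MA = 3.0, 5.0


@dataclass(frozen=True)
class Pulse:
    pixel: int         # row-major index of the electrode pair (assumed numbering)
    current_ma: float  # pulse amplitude in milliamps
    start_us: int      # start time relative to the beginning of the frame


def clamp_current(ma: float) -> float:
    """Keep the requested amplitude inside the 3-5 mA range."""
    return max(MIN_MA, min(MAX_MA, ma))


def schedule_frame(grid: List[List[bool]], current_ma: float,
                   pulse_us: int = 200) -> List[Pulse]:
    """Build a sequential pulse schedule: one pulse per active tactile pixel.

    Pixels are visited in row-major order and each pulse starts after the
    previous one ends, so only one electrode pair is driven at any moment.
    """
    ma = clamp_current(current_ma)
    cols = len(grid[0]) if grid else 0
    pulses: List[Pulse] = []
    t = 0
    for r, row in enumerate(grid):
        for c, on in enumerate(row):
            if on:
                pulses.append(Pulse(pixel=r * cols + c, current_ma=ma, start_us=t))
                t += pulse_us
    return pulses


if __name__ == "__main__":
    grid = [[False] * 6 for _ in range(5)]
    grid[0][0] = grid[1][2] = True
    for p in schedule_frame(grid, current_ma=4.0):
        print(p)
```

Driving one pair at a time is a common way to keep neighboring electrodes from sharing current, which is why this sketch uses it. The actual device may instead use a different scanning order, pulse shape, or per-user intensity calibration.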

User study

Our user studies with Blind & Low Vision participants focused on daily tasks (e.g., grasping objects). After only brief training (~15 min), most participants completed the tasks without touching unintended objects. Compared with the eyes' perspective, participants found that the hands' perspective provides a more ergonomic way of seeing.

We also let participants choose which tactile perspective to use for a handshaking task. All of them chose to use both devices, and most stated that the eyes' perspective provides an overview while the hands' perspective provides details. We were impressed by how fluently they used these new tactile senses!

Team

This research is from the Human-Computer Integration Lab at the University of Chicago, by:

Publication

This work was published at ACM CHI 2025 (see the paper in the ACM DL). The authors' version is available here (PDF).

Press kit

High-resolution photos and unannotated video are available here.

Source code

Source code is available here.

Funding source

This research is supported by the Google Award for Inclusion Research.

University of Chicago | Computer Science